At its heart, a Neural Network is a computational system inspired by the structure and function of the human brain. Imagine a vast, interconnected web of neurons, or nodes, organized in layers.

These nodes receive inputs, process them, and pass the results to the next layer, much like a thought forming and rippling through our minds. It's a method within the broader field of artificial intelligence designed to recognize patterns.

The purpose? To enable machines to learn from data, identify complex relationships, and make intelligent decisions with minimal human intervention. The global neural network software market reflects this growing importance.

According to Allied Market Research, the global neural network market is projected to grow at a CAGR of 26.7% between 2021 and 2030. This isn't just a fleeting trend; it's a fundamental shift in how we approach problem-solving.

If you’re ready to embrace the future, this post covers everything you need to get started. Let’s begin!

Types of Neural Networks

Just as a seasoned author employs various literary devices, the world of AI utilizes different types of neural networks, each suited to particular tasks. Understanding these distinctions is key to appreciating their diverse capabilities.

While there are many specialized architectures, let us acquaint ourselves with some of the most prominent players:

| Neural Network Type | Key Characteristic/Use |
|---|---|
| Artificial Neural Networks (ANNs) / Feedforward Neural Networks | The simplest architecture, in which information flows in one direction only; primarily used for classification and regression. |
| Convolutional Neural Networks (CNNs) | Specialized for processing visual data such as images and videos by learning spatial feature hierarchies. |
| Recurrent Neural Networks (RNNs) | Feature a form of memory by feeding previous outputs back as inputs, making them suitable for sequential data tasks. |
| Long Short-Term Memory (LSTM) Networks | Advanced RNNs specifically designed to retain information over extended periods for complex sequence processing. |
| Generative Adversarial Networks (GANs) | Consist of two competing networks, a generator and a discriminator, whose rivalry produces highly realistic data. |

1. Artificial Neural Networks (ANNs) / Feedforward Neural Networks: These are the simplest type, where information flows in only one direction—from input to output. They are the bedrock, often used for classification and regression tasks. Think of them as a straightforward narrative, clear and direct.

2. Convolutional Neural Networks (CNNs): When it comes to processing visual data – images and videos – CNNs are the virtuosos. They use special layers called convolutional layers to automatically and adaptively learn spatial hierarchies of features. If an ANN is a simple story, a CNN is a richly illustrated one, capturing every visual nuance. These are instrumental in computer vision.

3. Recurrent Neural Networks (RNNs): These networks possess a kind of memory. The output from previous steps is fed back into the input for the current step, making them ideal for tasks involving sequential data, like speech recognition or natural language processing. They understand context and sequence, much like a story where past events influence the present.

4. Long Short-Term Memory (LSTM) Networks: A special kind of RNN, LSTMs are designed to remember information for longer periods, overcoming some of the limitations of traditional RNNs. They are adept at handling long sequences, making them powerful tools for complex language tasks.

5. Generative Adversarial Networks (GANs): Imagine two networks, a generator and a discriminator, locked in a creative duel. The generator tries to create realistic data (like images or text), while the discriminator tries to distinguish between real and fake data. This adversarial process results in remarkably high-quality outputs. It's like a writer and a critic constantly pushing each other to achieve perfection.
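
To make the "memory" of a recurrent network from the list above a little more concrete, here is a minimal NumPy sketch of a plain recurrent layer. It is an illustration under stated assumptions, not a production implementation: the function name `rnn_forward`, the layer sizes, and the random weights are all hypothetical, and the sketch feeds back the hidden state (the layer's previous output) rather than modeling any particular library's RNN.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a plain recurrent layer over a sequence.

    inputs: array of shape (time_steps, input_size)
    Returns the hidden state after each step, shape (time_steps, hidden_size).
    """
    hidden = np.zeros(W_h.shape[0])  # the "memory" starts out empty
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state,
        # which is how earlier steps can influence later ones.
        hidden = np.tanh(W_x @ x_t + W_h @ hidden + b)
        states.append(hidden)
    return np.array(states)

rng = np.random.default_rng(0)
W_x = rng.normal(size=(4, 3))  # input -> hidden weights (hypothetical sizes)
W_h = rng.normal(size=(4, 4))  # hidden -> hidden weights: the feedback loop
b = np.zeros(4)

sequence = rng.normal(size=(5, 3))  # 5 time steps, 3 features each
states = rnn_forward(sequence, W_x, W_h, b)
print(states.shape)  # (5, 4)
```

Setting `W_h` to all zeros removes the feedback loop, and the layer then behaves like a feedforward layer applied to each step independently.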

Across mobile applications and other software, we often encounter these networks in action, most visibly in AI-driven personalization. Such products rely on these very architectures to create smarter, more intuitive user experiences.

Why are Neural Networks Important?

“Any article, any news, or any website that you have on the internet probably has been seen by the model, and the model has been trained on this using the neural network or deep learning.”

~Ratnakar Pandey, AI & Data Science Consultant

The significance of neural networks in our modern era can scarcely be overstated. They are not merely academic curiosities but powerful engines driving tangible change across industries. Their importance stems from a confluence of remarkable capabilities.

For instance:

1. Ability to Learn from Complex Data: Neural networks excel at discerning patterns in vast and often messy datasets that would overwhelm human analysts or traditional algorithms. These networks can find the proverbial needle in a digital haystack.

2. Adaptability and Fault Tolerance: Like a seasoned storyteller who can adjust a tale to suit the audience, neural networks can adapt to new, unseen data after being trained. They also exhibit a degree of fault tolerance; the malfunctioning of a few nodes doesn't necessarily cripple the entire system, thanks to their distributed nature.

3. Automation of Tasks: These networks can automate tasks that previously required human intelligence and intuition, from medical diagnosis support to financial fraud detection. This frees up human capital for more creative and strategic endeavors.

4. Continuous Improvement: The more data a neural network is exposed to (during its training phase), the better it generally becomes at its designated task. This capacity for continuous learning is a hallmark of their power.

5. Driving Innovation: From self-driving cars to personalized medicine, neural networks are at the forefront of many groundbreaking innovations. They are, in essence, a key catalyst for the ongoing technological revolution.

The benefits of Neural Network technology are manifold, enabling us to tackle problems once deemed too complex or too subtle for machines.

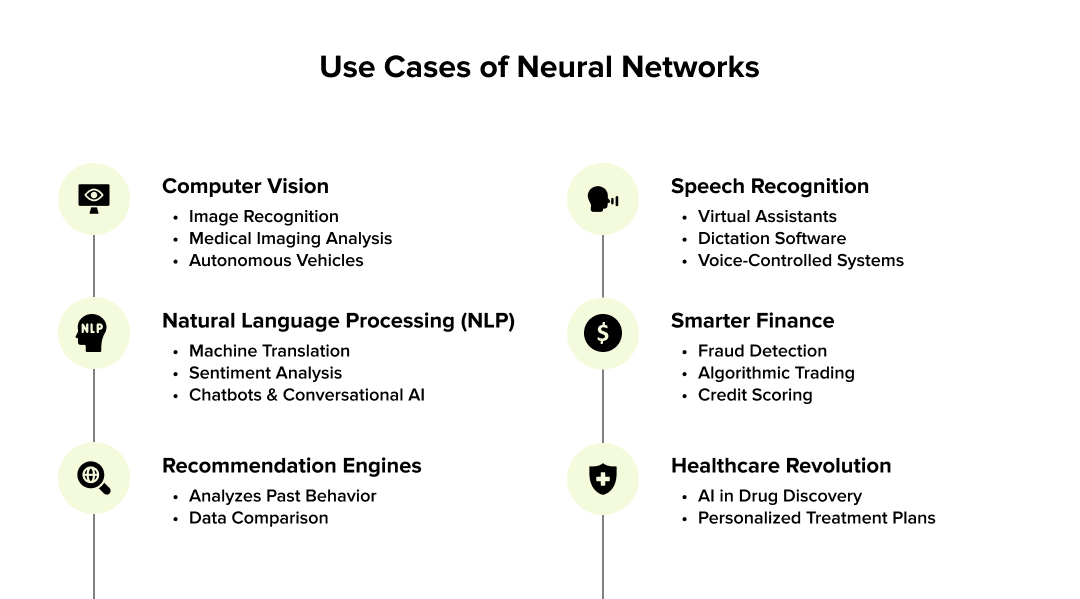

Neural Networks Use Cases

To truly grasp the impact of neural networks, let us consider some neural network examples inspired by real life. These are not abstract possibilities but functioning realities that many of us interact with daily.

1. Computer Vision: This is a domain where neural networks, particularly CNNs, have achieved superhuman performance in some areas.

- Image Recognition: Identifying objects, people, and scenes in photographs and videos. Your smartphone uses this to categorize your photos.

- Medical Imaging Analysis: The integration of AI in healthcare is assisting doctors in detecting diseases like cancer from X-rays, MRIs, and CT scans with remarkable accuracy.

- Autonomous Vehicles: AI in autonomous vehicles enables cars to "see" and interpret their surroundings in real-time, identifying pedestrians, other vehicles, traffic signs, and more. How do cars do that? By leveraging neural networks and deep learning for advanced perception and decision-making.

2. Speech Recognition: The ability of machines to understand and transcribe human speech has been driven in large part by sequence models such as RNNs and LSTMs.

- Virtual Assistants: Siri, Alexa, and Google Assistant all rely heavily on neural networks to process your voice commands.

- Dictation Software: Converting spoken words into text for documents and emails.

- Voice-Controlled Systems: Operating devices and interfaces using just your voice.

3. Natural Language Processing (NLP): This field focuses on enabling computers to understand, interpret, and generate human language. NLP use cases span many kinds of products and devices. For instance:

- Machine Translation: Translation tools like Google Translate use sophisticated neural networks to provide translations between languages with increasing fluency.

- Sentiment Analysis: Determining the emotional tone behind the text, used by businesses to gauge customer opinions from reviews or social media.

- Chatbots and Conversational AI: Creating intelligent agents that can engage in meaningful conversations with users, providing customer support or information.

4. Recommendation Engines: The personalized suggestions you receive on platforms like Netflix, Amazon, or Spotify are often generated by neural networks.

- They analyze your past behavior (items viewed, purchased, liked) and compare it with the behavior of millions of other users to predict what you might like next. This drives engagement and sales. These advantages are one reason the AI-powered personalization market is projected to grow at a CAGR of 17.5% between 2024 and 2029.

5. Predictive Analytics: Here the goal is to spot patterns in historical data and predict likely outcomes, good or bad. The use case is most common in the fintech sector, but AI-powered R&D departments also use it to forecast sales, and the health-tech industry uses neural networks to identify drug candidates. The integration of AI in diagnostics has further expanded the use of neural networks in healthcare, inspiring modern healthcare business ideas.

- Fraud Detection: Identifying fraudulent credit card transactions or insurance claims by spotting unusual patterns.

- Algorithmic Trading: Making high-speed trading decisions based on market predictions.

- Credit Scoring: Assessing creditworthiness by analyzing diverse financial data.

- Drug Discovery: AI in drug discovery, powered by neural networks, is accelerating the process of identifying potential drug candidates by predicting their efficacy and side effects.

- Personalized Treatment Plans: Tailoring medical treatments based on an individual's genetic makeup and lifestyle data.

These examples merely scratch the surface. From optimizing supply chains to predicting weather patterns, the applications are as vast as human ingenuity itself.

How Do Neural Networks Work?

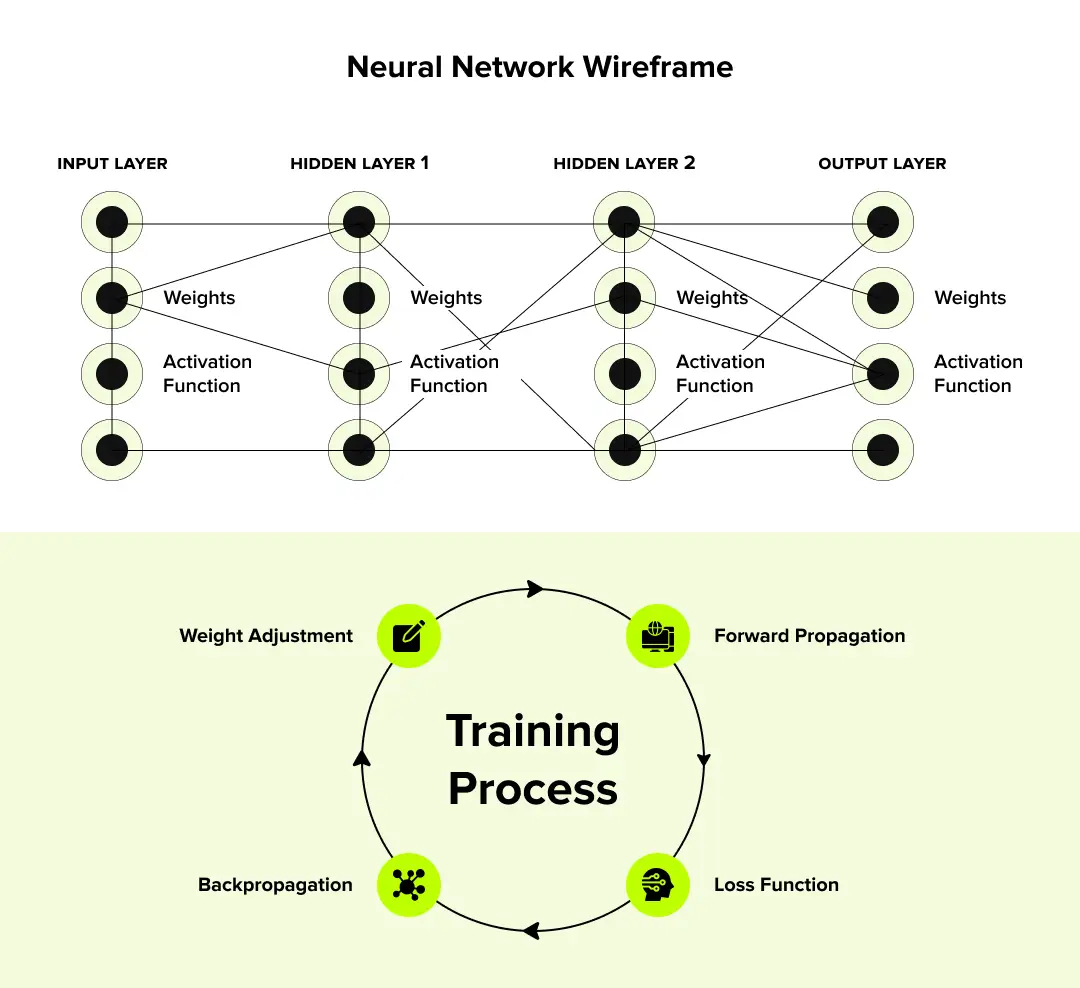

Now, let us peer into the inner workings of these fascinating constructs. While the mathematical intricacies involved in the functioning of neural networks can be profound, the fundamental concept is elegantly simple, drawing its analogy from our own brains.

The components of a neural network include:

1. The Neuron (Node): The basic unit is an artificial neuron or node. Each neuron receives one or more inputs. These inputs are numerical values. Each input is assigned a "weight," which signifies its importance.

2. Weighted Sum and Activation Function: The neuron calculates a weighted sum of its inputs. This sum is then passed through an "activation function." This function determines whether the neuron should be "activated" or "fire" and what its output signal should be. Think of it as a gatekeeper deciding if the information is significant enough to pass on. Common activation functions include Sigmoid, Tanh, and ReLU.

3. Layers: Neurons are organized into layers:

- Input Layer: Receives the initial data (e.g., the pixels of an image, the words in a sentence).

- Hidden Layers: These are the intermediate layers between the input and output. This is where the actual "thinking" or complex feature extraction happens. A network can have zero or many hidden layers. Networks with multiple hidden layers are often referred to as "deep" neural networks.

- Output Layer: Produces the final result (e.g., a classification of an image, a predicted word).

4. Training – The Learning Process: This is the most crucial part. A neural network "learns" by being trained on a large dataset. The process typically involves:

- Forward Propagation: Input data is fed into the network, and it travels through the layers, producing an output.

- Loss Function: This function measures how far the network's output is from the desired (correct) output. The goal is to minimize this "error" or "loss."

- Backpropagation: The error is then propagated backward through the network. This process calculates how much each weight in the network contributed to the error.

- Weight Adjustment (Optimization): The weights are then adjusted slightly in a direction that reduces the error. Algorithms like Gradient Descent are used for this optimization.

- Iteration: This cycle of forward propagation, loss calculation, backpropagation, and weight adjustment is repeated many times (epochs) with many examples from the training data until the network's performance reaches a satisfactory level.
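
The first three components above (the neuron, the weighted sum with its activation function, and layers) can be sketched in a few lines of NumPy. This is a minimal illustration rather than a definitive implementation: the function names, weights, and layer sizes are hypothetical, and the `bias` term is an assumption added for the example, since the description above does not mention one even though most practical networks include it.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    return np.maximum(0.0, z)

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of the inputs, then an activation."""
    weighted_sum = np.dot(weights, inputs) + bias
    return activation(weighted_sum)

def dense_layer(inputs, weight_matrix, biases, activation):
    """A layer is many neurons reading the same inputs (one weight row each)."""
    return activation(weight_matrix @ inputs + biases)

x = np.array([0.5, -1.0, 2.0])   # input layer: three numeric features
w = np.array([0.4, 0.3, -0.2])   # one weight per input

# The weighted sum here is negative, so ReLU does not "fire" and returns 0.0.
print(neuron(x, w, bias=0.1, activation=relu))

# Input layer -> hidden layer (2 neurons, Tanh) -> output layer (1 neuron, Sigmoid)
W_hidden = np.array([[0.2, -0.5, 0.1],
                     [0.7, 0.1, -0.3]])
b_hidden = np.zeros(2)
W_out = np.array([[1.0, -1.0]])
b_out = np.zeros(1)

hidden = dense_layer(x, W_hidden, b_hidden, np.tanh)
output = dense_layer(hidden, W_out, b_out, sigmoid)
print(output)  # a single value between 0 and 1
```

Passing the data through `dense_layer` twice like this is exactly the forward propagation step of training; the weights are fixed here, whereas training would adjust them.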

It is a process of gradual refinement, much like an artist painstakingly adjusts strokes on a canvas until the desired image emerges. The network essentially teaches itself to recognize patterns and make accurate predictions by iteratively correcting its mistakes.
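
The training cycle described above (forward propagation, loss, backpropagation, weight adjustment, iteration) might look roughly like the following NumPy sketch. Everything specific in it is an assumption chosen for illustration: the toy XOR dataset, the single hidden layer of four Tanh neurons, the mean squared error loss, the learning rate, and the number of epochs. Real projects typically rely on a framework that computes the gradients automatically.

```python
import numpy as np

rng = np.random.default_rng(42)

# Toy dataset (XOR): four examples with two inputs and one target each.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([[0.0], [1.0], [1.0], [0.0]])

# Hypothetical architecture: 2 inputs -> 4 hidden neurons -> 1 output.
W1 = rng.normal(size=(2, 4))
b1 = np.zeros(4)
W2 = rng.normal(size=(4, 1))
b2 = np.zeros(1)
learning_rate = 0.5

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

losses = []
for epoch in range(2000):
    # Forward propagation: data flows input -> hidden -> output.
    hidden = np.tanh(X @ W1 + b1)
    pred = sigmoid(hidden @ W2 + b2)

    # Loss function: mean squared error between prediction and target.
    loss = np.mean((pred - y) ** 2)
    losses.append(loss)

    # Backpropagation: apply the chain rule layer by layer, from the
    # output back to the input, to get the gradient for every weight.
    d_pred = 2.0 * (pred - y) / len(X)
    d_z2 = d_pred * pred * (1.0 - pred)       # derivative of sigmoid
    d_W2 = hidden.T @ d_z2
    d_b2 = d_z2.sum(axis=0)
    d_hidden = d_z2 @ W2.T
    d_z1 = d_hidden * (1.0 - hidden ** 2)     # derivative of tanh
    d_W1 = X.T @ d_z1
    d_b1 = d_z1.sum(axis=0)

    # Weight adjustment: one gradient descent step against the gradient.
    W1 -= learning_rate * d_W1
    b1 -= learning_rate * d_b1
    W2 -= learning_rate * d_W2
    b2 -= learning_rate * d_b2

print(f"loss at first epoch: {losses[0]:.4f}, at last epoch: {losses[-1]:.4f}")
```

Each pass through the loop is one iteration of the cycle. The recorded `losses` should shrink over time, which is the "gradual refinement" in numerical form.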

AI vs. ML vs. Neural Networks vs. Deep Learning

A common question is how these terms relate: what is the difference between AI and neural networks, and where does Deep Learning fit into the picture? The short answer is that AI is the broadest of them all.

Machine Learning, Neural Networks, and Deep Learning are all techniques that ultimately help AI systems function.

Here’s a quick breakdown of how these concepts differ and relate.

| Term | Key Definition & Relationship |
|---|---|
| Artificial Intelligence (AI) | This represents the broadest field, encompassing the overarching goal of creating machines capable of performing tasks that typically require human intelligence. |
| Machine Learning (ML) | As a subfield of AI, Machine Learning focuses on developing algorithms that enable computer systems to learn directly from data without explicit programming for each task. |
| Neural Networks (NN) | These are a specific type of Machine Learning model, whose architecture is inspired by the human brain's structure, serving as one of many tools within the ML toolkit. |
| Deep Learning (DL) | This is a specialized subfield of Machine Learning that employs Neural Networks with multiple layers ("deep" architectures) to learn highly complex patterns from large datasets. |

In essence, Deep Learning utilizes Neural Networks, which are a technique within Machine Learning, which itself is a branch of Artificial Intelligence.

Wrapping Up

And so, our exploration of the Neural Network draws to a close, though its story in the annals of technology is far from over. We have journeyed from its fundamental definition, inspired by the intricate dance of our own neurons, to its diverse forms and the myriad ways it reshapes our world.

From identifying a face in a crowd to translating languages in real-time, from powering our streaming recommendations to aiding physicians in saving lives, these networks are the quiet engines of a new era.

We've seen how neural networks work, through a patient process of learning and refinement, and we've understood their place within the grander narratives of Artificial Intelligence and Machine Learning. They are not a fleeting technological fashion but a profound advancement, offering solutions and capabilities previously confined to the realm of human cognition.

As AI keeps revolutionizing the IT world, the Neural Network will undoubtedly remain a central character. Its potential is immense, its applications ever-expanding, and its impact on our lives, both seen and unseen, continues to grow. The journey of understanding and harnessing these powerful tools is one that promises continued marvels, and we shall be here to chronicle every significant chapter.

Frequently Asked Questions

- Are neural networks supervised or unsupervised?

- Can neural networks be used for classification?

- How do neural networks learn from experience?

- What neural networks does ChatGPT use?

- What do neural networks memorize and why?

- What are the applications of neural networks?

- Are neural networks machine learning?