- What Exactly is an AI Detector, and Why Should You Care?

- The Core Methods Used by the AI Detector Tools

- The Million-Dollar Question: Can You Trust Them?

- It's Not Just Text: The Fight Against Deepfakes

- Who's Using This Stuff? Real-World Applications

- AI Detectors vs. Plagiarism Checkers: A Quick Takedown

- Limitations of AI Content Detectors

- Bypassing and Improving AI Detection

- The Road Ahead is a Messy One

TL;DR: How do AI detectors work? They analyze text, images, and video to judge whether content is human- or AI-generated. Core methods include perplexity (predictability), burstiness (sentence rhythm), classification models, and watermark detection. AI detectors are used in academia, publishing, SEO, and recruitment to safeguard authenticity. However, no tool is 100% accurate: false positives, evolving AI models, and human-AI hybrids keep detection in a constant cat-and-mouse game.

We're in a strange new world where your new intern, your marketing team, and even you might be using AI to write. The result? A tidal wave of content where the line between human and machine has blurred into near invisibility. This has created a booming, almost frantic, demand for a digital gatekeeper: the AI detector.

But how do AI detectors work, really? Are they digital bloodhounds or just glorified magic 8-balls? As leaders and creators, we can't afford to guess. The authenticity of our work is on the line.

We're going to pull back the curtain and show you the engine room of this critical technology.

What Exactly is an AI Detector, and Why Should You Care?

At its heart, an AI content detector is a specialized piece of software trained to find the fingerprints of artificial intelligence on a piece of content. It’s not just for text. It's for images, videos, and code. Its one and only job is to answer the question: "Was a human or a machine the primary creator here?"

Why does this matter? Trust. That's the bottom line. As we weave AI use cases into the fabric of our businesses, we have to ensure we're not losing our authentic voice. These tools use machine learning algorithms to analyze everything from linguistic patterns to hidden metadata, then output a human content score that estimates how likely it is you're looking at the real deal.

The Core Methods Used by the AI Detector Tools

AI scanners aren't guessing at random. They are pattern-matching machines that run a series of statistical tests in seconds. Here are the core techniques they use to sniff out AI.

- Classification Models: This is the most common approach. Developers feed a model, the classifier, a mountain of data: millions of articles, books, and blogs written by humans, plus an equally massive pile of AI-generated text. The model learns the subtle statistical differences, the "tells," of each. When you submit text, the classifier scores it against the patterns it learned and makes a judgment call.

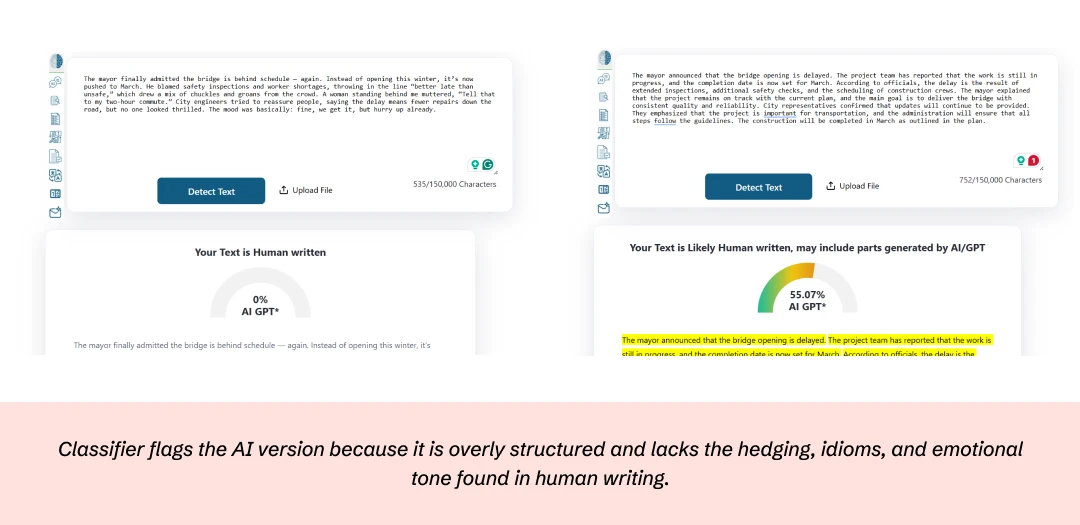

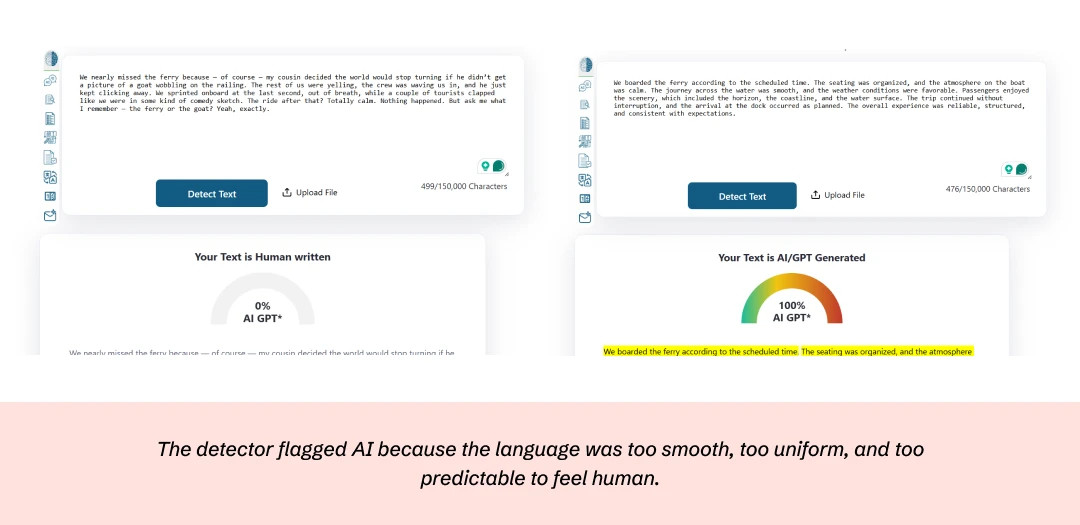
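To make the classifier idea concrete, here is a minimal sketch: a toy multinomial Naive Bayes model trained on a handful of labeled sentences. The training sentences, the `NaiveBayesDetector` class, and the whitespace tokenizer are hypothetical illustrations, not how any particular product works; commercial detectors train on vastly larger datasets, and their exact models are not public.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Deliberately simple tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


class NaiveBayesDetector:
    """Hypothetical toy classifier that labels text as 'human' or 'ai'."""

    LABELS = ("human", "ai")

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in self.LABELS}
        self.doc_counts = Counter()
        self.vocab: set[str] = set()

    def fit(self, docs: list[str], labels: list[str]) -> "NaiveBayesDetector":
        # Count how often each word appears in human vs. AI training text
        for doc, label in zip(docs, labels):
            tokens = tokenize(doc)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def _log_score(self, tokens: list[str], label: str) -> float:
        counts = self.word_counts[label]
        total = sum(counts.values())
        prior = self.doc_counts[label] / sum(self.doc_counts.values())
        # Add-one (Laplace) smoothing keeps unseen words from zeroing out a class
        return math.log(prior) + sum(
            math.log((counts[t] + 1) / (total + len(self.vocab))) for t in tokens
        )

    def prob_ai(self, text: str) -> float:
        tokens = tokenize(text)
        scores = {label: self._log_score(tokens, label) for label in self.LABELS}
        # Turn the two log scores into a probability without overflow
        top = max(scores.values())
        weights = {label: math.exp(s - top) for label, s in scores.items()}
        return weights["ai"] / sum(weights.values())


# Hypothetical training data, far too small for real use
docs = [
    "honestly no clue why the bus was late again lol",
    "my gran swears by putting vinegar on chips",
    "in conclusion it is important to note that",
    "furthermore it is essential to consider the key factors",
]
labels = ["human", "human", "ai", "ai"]

detector = NaiveBayesDetector().fit(docs, labels)
print(f"P(AI) = {detector.prob_ai('it is important to consider'):.2f}")
```

The output is a probability rather than a yes/no answer, which is why detectors report confidence scores.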

- Perplexity (The 'Surprise' Factor): Human writing is wonderfully unpredictable. We use weird idioms, change our minds mid-sentence, and generally keep things interesting. This gives our writing high perplexity, meaning a language model finds each next word harder to predict. AI, on the other hand, is built to favor statistically probable next words. That makes its writing incredibly smooth but also... predictable. Low perplexity is a major red flag for an AI scanner.

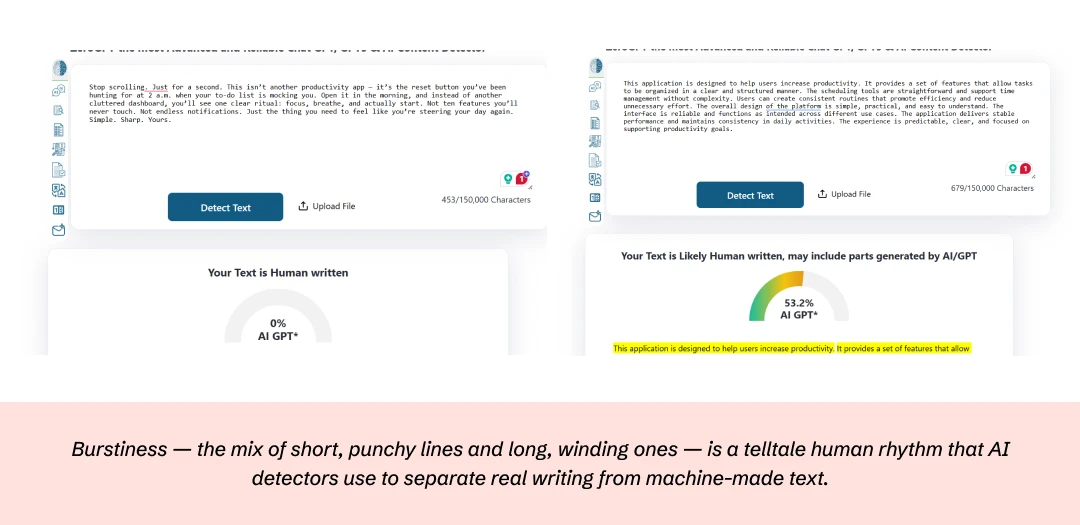

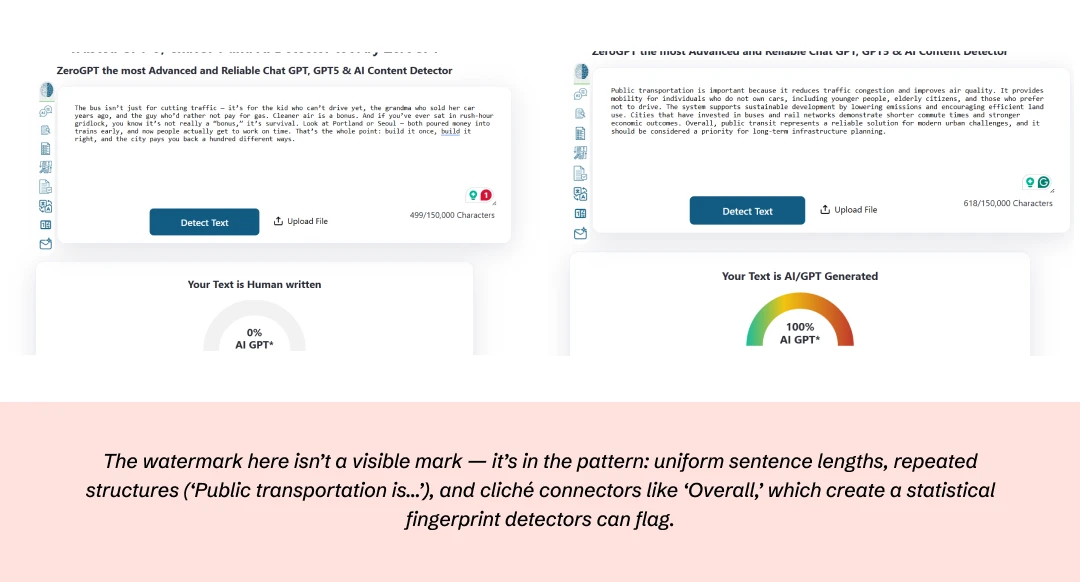
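The perplexity idea can be sketched with a tiny bigram language model. Real detectors score text with a large neural language model; the bigram model, the three-sentence reference corpus, and the function names here are illustrative assumptions. Perplexity is the exponential of the average negative log-probability per word, so text the model finds predictable scores low.

```python
import math
from collections import Counter


def train_bigram(corpus: list[str]) -> tuple[Counter, Counter, int]:
    """Count bigrams and their contexts in a (hypothetical) reference corpus."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split()
        vocab.update(tokens[1:])
        contexts.update(tokens[:-1])
        bigrams.update(zip(tokens, tokens[1:]))
    return contexts, bigrams, len(vocab) + 1  # +1 reserves a slot for unseen words


def perplexity(text: str, contexts: Counter, bigrams: Counter, vocab_size: int) -> float:
    tokens = ["<s>"] + text.lower().split()
    if len(tokens) < 2:
        raise ValueError("need at least one word to score")
    log_prob = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        # Add-one smoothed P(word | prev)
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size)
        log_prob += math.log(p)
    # Perplexity = exp(-(1/N) * sum of log-probabilities)
    return math.exp(-log_prob / (len(tokens) - 1))


corpus = ["the cat sat on the mat", "the dog sat on the rug", "the cat sat on the rug"]
model = train_bigram(corpus)
print(perplexity("the cat sat on the mat", *model))  # predictable word order
print(perplexity("mat the on rug dog cat", *model))  # surprising word order
```

The familiar sentence gets a much lower perplexity than the scrambled one, which is the same contrast a detector exploits, just at a far larger scale.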

- Burstiness (The Rhythm of Writing): Pay attention to how people write. We use short, punchy sentences. Then long, winding ones. That variation is burstiness. AI models often struggle with this, producing text where the sentence lengths are suspiciously uniform. It lacks that natural, human rhythm.

- Watermarking: This is the industry trying to get ahead of the problem. Some developers of Large Language Models are embedding an invisible statistical signature, a watermark, into the content their tools produce. It's a clever way to make identification far more reliable, but it only works on content from generators that embed one, and it requires a specific AI generator detector to find it.
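Burstiness and watermark detection both reduce to simple statistics. The sketch below measures burstiness as the coefficient of variation of sentence lengths (one common choice, assumed here). It then checks for a watermark with a simplified "green list" test modeled on published research (Kirchenbauer et al., 2023): if a generator nudged its word choices toward a pseudo-random "green" subset that depends on the previous word, watermarked text contains more green pairs than chance, which shows up as a large z-score. The hashing rule and all function names are hypothetical; this is not any specific vendor's scheme.

```python
import hashlib
import math
import re
import statistics


def burstiness(text: str) -> float:
    """Coefficient of variation (std / mean) of sentence lengths in words."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0  # one sentence has no rhythm to measure
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def is_green(prev_word: str, word: str, gamma: float = 0.5) -> bool:
    """Hypothetical rule: hash the word pair; the lowest `gamma` share of hashes is 'green'."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma


def watermark_z_score(green: int, total: int, gamma: float = 0.5) -> float:
    """z = (green - gamma*T) / sqrt(T * gamma * (1 - gamma)); a large z suggests a watermark."""
    return (green - gamma * total) / math.sqrt(total * gamma * (1 - gamma))


def detect_watermark(text: str, gamma: float = 0.5) -> float:
    words = text.lower().split()
    pairs = list(zip(words, words[1:]))
    if not pairs:
        raise ValueError("need at least two words")
    green = sum(is_green(p, w, gamma) for p, w in pairs)
    return watermark_z_score(green, len(pairs), gamma)


print(burstiness("Short. Then a much longer sentence that winds on and on. Done."))
print(watermark_z_score(green=75, total=100))  # 5.0: far more green pairs than chance
```

Uniform sentence lengths give a burstiness of 0, while ordinary unwatermarked text should hover around z = 0 because roughly half of its word pairs land in the green list by chance.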

The Million-Dollar Question: Can You Trust Them?

So, here's where it gets messy. Do AI detectors actually work? Yes, but with a giant asterisk. Think of them as a highly skilled consultant, not an infallible judge. They provide a probability, a confidence score, not a verdict.
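A quick Bayes' rule calculation shows why a score is not a verdict. The numbers are hypothetical, not measurements of any real tool: a detector that catches 95% of AI text, wrongly flags 5% of human text, and is run on a pool where 10% of submissions are AI-written.

```python
def share_of_flags_that_are_ai(sensitivity: float, false_positive_rate: float,
                               prevalence: float) -> float:
    """P(text is AI | detector flagged it), by Bayes' rule."""
    flagged_ai = sensitivity * prevalence
    flagged_human = false_positive_rate * (1 - prevalence)
    return flagged_ai / (flagged_ai + flagged_human)


# Hypothetical scenario: 1,000 submissions, 10% of them AI-written
submissions, prevalence = 1000, 0.10
sensitivity, fpr = 0.95, 0.05

print(round(submissions * prevalence * sensitivity))  # AI texts correctly flagged
print(round(submissions * (1 - prevalence) * fpr))    # human texts wrongly flagged
print(round(share_of_flags_that_are_ai(sensitivity, fpr, prevalence), 3))
```

With these made-up numbers, about 95 AI texts and 45 human texts get flagged, so roughly a third of all flags land on human writers even though the detector sounds accurate on paper.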

Here’s an exact quote from “Can we trust academic AI detective? Accuracy and limitations of AI-output detectors” (Erol et al., 2026):

“Human-authored texts consistently had the lowest AI likelihood scores, while AI-generated texts exhibited significantly higher scores across all versions of ChatGPT (p<0.01). … However, none of the detectors achieved 100% reliability in distinguishing AI-generated content.”

In practice, the reliability of any AI text detector depends heavily on a few things:

- The AI It Was Trained On: A detector trained mostly on GPT-3 output can get steamrolled by text from GPT-4o. The tech evolves so fast that detectors are in a constant game of catch-up.

- Short and Sweet is Hard to Beat: The shorter the text, the less data the detector has to work with, and the more likely it is to make a mistake.

- The Human-AI Hybrid: What happens when you use an AI to create a draft and then spend hours editing it? You get a hybrid text that can fool just about any detector out there.
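The short-text problem is partly plain statistics: a score averaged over fewer words is noisier. This simulation assumes, purely for illustration, that each word's surprisal (how unexpected it is, in bits) is an independent draw from the same normal distribution. Real text is not that tidy, but the basic effect of averaging carries over.

```python
import random
import statistics


def spread_of_estimates(num_words: int, trials: int = 500, seed: int = 0) -> float:
    """Std. dev. of the average surprisal across many simulated texts of the same length."""
    rng = random.Random(seed)
    averages = []
    for _ in range(trials):
        # Hypothetical per-word surprisal values for one writer (mean 5 bits, sd 2 bits)
        surprisals = [rng.gauss(5.0, 2.0) for _ in range(num_words)]
        averages.append(statistics.mean(surprisals))
    return statistics.stdev(averages)


for length in (20, 100, 500):
    print(length, "words ->", round(spread_of_estimates(length), 3))
```

The spread shrinks roughly as 1/√n, so a tweet-length sample hands a detector a far shakier number to compare against its threshold than a full essay does.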

It's Not Just Text: The Fight Against Deepfakes

The detection battleground has expanded well beyond essays. The rise of hyper-realistic AI-generated images and deepfakes has weaponized misinformation, making AI image and video detectors an urgent necessity.

These tools hunt for different clues. They're not looking at grammar; they're looking for pixels.

- Pixel-Level Artifacts: Tiny inconsistencies in lighting, shadows, or reflections that betray digital tampering.

- Unnatural Biology: AI can struggle with the fine details—the way hair falls, the inside of an ear, or the way a person blinks.

- Blood Flow Analysis: It sounds like sci-fi, but tools like Intel's FakeCatcher analyze the subtle, almost invisible color changes in a person's face as blood pumps under the skin. Most deepfakes don't replicate this.
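The blood-flow idea, known in research as remote photoplethysmography (rPPG), starts by recovering a pulse from tiny color changes in facial skin. The sketch below is not Intel's FakeCatcher algorithm. It assumes you already have the average green-channel value of a face region for each video frame (here, a synthetic signal) and finds the strongest frequency in a plausible heart-rate band. A real detector would go further and check whether that pulse is present and consistent across the face.

```python
import math
import random


def estimate_pulse_bpm(signal: list[float], fps: float, low_hz: float = 0.7,
                       high_hz: float = 4.0, step_hz: float = 0.01) -> float:
    """Return the strongest frequency in [low_hz, high_hz], converted to beats per minute."""
    mean = sum(signal) / len(signal)
    centered = [x - mean for x in signal]  # drop the constant skin-tone offset
    best_hz, best_power = low_hz, -1.0
    for k in range(int(round((high_hz - low_hz) / step_hz)) + 1):
        hz = low_hz + k * step_hz
        # Correlate with a cosine and a sine at this frequency (a single DFT bin)
        re_part = sum(x * math.cos(2 * math.pi * hz * i / fps) for i, x in enumerate(centered))
        im_part = sum(x * math.sin(2 * math.pi * hz * i / fps) for i, x in enumerate(centered))
        power = re_part * re_part + im_part * im_part
        if power > best_power:
            best_hz, best_power = hz, power
    return best_hz * 60.0


# Synthetic stand-in for per-frame green values: 10 s at 30 fps, 72 bpm pulse plus noise
fps, rng = 30.0, random.Random(1)
frames = [120.0 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps) + rng.gauss(0, 0.2)
          for i in range(300)]
print(round(estimate_pulse_bpm(frames, fps)))
```

The 0.7 to 4.0 Hz band (about 42 to 240 bpm) is an assumed range for human heart rates; a face with no consistent peak in that band is the kind of anomaly this line of detection looks for.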

Who's Using This Stuff? Real-World Applications

The adoption of AI content detectors is exploding in fields where authenticity is everything. Frankly, if your business publishes anything, you should be paying attention.

Academia: Schools and universities are on the front lines, using these tools to protect academic integrity and ensure the work students submit is their own. The goal is to spot work produced with AI copywriting tools and keep the process fair.

Publishing & SEO: Smart publishers and marketers are using detectors to vet content, ensuring it’s original and won't get flagged by Google as low-value, AI-generated spam. It's a new, crucial layer in any serious AI in SEO playbook.

Newsrooms: In the war against fake news, journalists need tools for media authentication to verify sources and debunk manipulated images or videos.

Recruitment: Some savvy recruiters now run cover letters through an AI writing checker to get a sense of a candidate's true communication style.

AI Detectors vs. Plagiarism Checkers: A Quick Takedown

People often lump these two together, but they do completely different jobs. It’s simple:

- A plagiarism checker asks: "Was this copied?" It checks for content originality by comparing text against a huge database of what's already out there.

- An AI detector asks: "Who wrote this?" AI detector tools analyze the intrinsic properties of the writing itself to determine if the author was a human or a machine.

| Feature | Plagiarism Checker | AI Detector |
|---|---|---|
| Primary Goal | Finds copied content. | Identifies AI-generated content. |
| Method | Compares text to a massive database. | Analyzes linguistic patterns (perplexity, etc.). |
| Output | Similarity score with source links. | Probability score of AI origin. |
| Example Tools | Turnitin, Copyscape | ZeroGPT, Copyleaks |

A piece of text can be 100% unique and pass a plagiarism check with flying colors, yet still be flagged as entirely AI-generated by an AI detector, even a free one.
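The difference is easy to see in code. A classic way to check for copying is to break texts into overlapping word sequences ("shingles") and measure their overlap with Jaccard similarity. The sketch below assumes that approach and a tiny in-memory "database"; commercial checkers' exact methods are proprietary. Notice that it says nothing about who wrote the text, only whether it matches a known source.

```python
import re
from typing import Optional


def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Overlapping n-word sequences, ignoring case and punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard_similarity(a: str, b: str, n: int = 3) -> float:
    """Shared shingles divided by all distinct shingles across both texts."""
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def best_match(submission: str, database: list[str]) -> tuple[float, Optional[str]]:
    """Highest-similarity source in a (hypothetical) reference database."""
    return max(((jaccard_similarity(submission, doc), doc) for doc in database),
               default=(0.0, None))


database = [
    "the quick brown fox jumps over the lazy dog",
    "a completely different sentence about detectors",
]
print(best_match("The quick brown fox jumps over the lazy cat!", database))
```

A freshly generated AI paragraph would score near zero against any database, because nothing was copied, while an AI detector could still flag it based on perplexity and burstiness.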

Limitations of AI Content Detectors

AI detectors are far from foolproof. Research, including the 2026 PMC study on neurosurgical abstracts, shows they misclassify both ways: genuine work flagged as AI (false positives) and machine-generated text slipping through as human (false negatives). Here’s where the cracks show:

- Bias in detection: Non-native English writers face a higher risk of being mislabeled, since simpler phrasing and restricted vocabulary can resemble AI’s statistical smoothness.

- Perplexity and burstiness reliance: Detectors lean heavily on these markers of unpredictability and rhythm, but newer AI models can be prompted or tuned to imitate messy human patterns, blurring the distinction.

- Lexical features and syntactic complexity: Current tools analyze word choice and sentence structure, yet highly structured human writing may look “too AI,” while informal AI text may pass as “human.”

- Mixed AI–human editing: A draft created by ChatGPT and lightly revised by a person often dodges detection, exposing the limits of style-based analysis.

- Short text analysis limitations: Brief passages, like emails or tweets, rarely give detectors enough linguistic material to make reliable judgments.

- Writing style variability: Humans don’t write alike — meticulous, formulaic authors may get flagged, while chaotic AI-crafted text could slip under the radar.

- Unreliability of AI detector tools: Even with ROC AUC values in the 0.9+ range, as reported in the PMC study, no detector achieved 100% reliability. Results differ by tool, dataset, and context.

- Difficulty detecting advanced AI models: As newer models grow more sophisticated in syntactic complexity and deliberate “humanization,” they outpace detectors designed for older outputs.

- The AI Humanizer loophole: Tools that rewrite AI text specifically to evade detectors act as cloaking devices, seriously undermining detection efforts.

The result is an endless cat-and-mouse game: detectors adapt, models evolve, and certainty remains out of reach.
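It helps to see why a high ROC AUC and real-world errors can coexist. AUC measures how often a detector ranks a random AI text above a random human text, but in practice you must pick one cutoff, and any cutoff trades false positives against false negatives. The scores below are invented for illustration.

```python
def roc_auc(human_scores: list[float], ai_scores: list[float]) -> float:
    """Probability that a random AI text outscores a random human text (ties count half)."""
    wins = 0.0
    for a in ai_scores:
        for h in human_scores:
            wins += 1.0 if a > h else 0.5 if a == h else 0.0
    return wins / (len(ai_scores) * len(human_scores))


def errors_at(threshold: float, human_scores: list[float],
              ai_scores: list[float]) -> tuple[int, int]:
    """(false positives, false negatives) when flagging scores >= threshold as AI."""
    false_pos = sum(s >= threshold for s in human_scores)
    false_neg = sum(s < threshold for s in ai_scores)
    return false_pos, false_neg


# Hypothetical detector scores (probability of AI) for 10 human and 10 AI texts
human = [0.05, 0.10, 0.15, 0.20, 0.30, 0.35, 0.40, 0.55, 0.62, 0.70]
ai = [0.45, 0.60, 0.65, 0.75, 0.80, 0.85, 0.88, 0.90, 0.95, 0.99]

print("AUC:", roc_auc(human, ai))
for t in (0.5, 0.7, 0.9):
    print(f"threshold {t}: (false positives, false negatives) = {errors_at(t, human, ai)}")
```

These made-up scores give an AUC of 0.94, yet every threshold misclassifies someone; moving the cutoff only shifts which kind of mistake you make.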

Bypassing and Improving AI Detection

Detection tools keep evolving, and so do the tactics used to bypass them.

- Bypassing tactics: Writers often turn to AI text humanizer tools such as Surfer’s Humanizer, Undetectable.ai, or Winston AI. These services aim to humanize AI text by altering lexical features, syntactic complexity, and rhythm. Other common tricks include inserting spelling mistakes, adding illogical word choices, or mixing AI drafting with human editing to confuse detectors.

- Why detectors struggle: Even advanced detectors have difficulty with output from the newest AI models, especially when that content has high perplexity and burstiness baked in. Short posts run into the limits of short-text analysis, and the variability of human writing styles makes false positives more likely.

- Improving detection: The best defense is redundancy. Running multiple AI detection tools together, applying anti-AI features, and adopting hybrid models that combine style checks with factual verification all improve reliability. Detection also works better when paired with content improvement suggestions and high-quality AI writing tools that encourage transparency over concealment.

The reality: false positives and false negatives will always exist, making the unreliability of AI detector tools part of the landscape. The focus should be on balanced, transparent use rather than blind reliance.
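The redundancy idea can be sketched as a simple voting rule: run several detectors, report every score, and flag text only when enough of them agree. The detector names and the fixed scores they return are hypothetical placeholders; a real setup would call each tool's own interface, which this sketch does not attempt to reproduce.

```python
from statistics import mean
from typing import Callable

Detector = Callable[[str], float]  # returns an estimated probability that text is AI-written


def ensemble_verdict(text: str, detectors: dict[str, Detector],
                     threshold: float = 0.5, min_agreement: int = 2) -> dict:
    """Flag text only if at least `min_agreement` detectors score it at or above `threshold`."""
    scores = {name: detect(text) for name, detect in detectors.items()}
    votes = sum(score >= threshold for score in scores.values())
    return {
        "scores": scores,  # keep individual scores visible for human review
        "mean_score": mean(scores.values()),
        "flagged": votes >= min_agreement,
    }


# Hypothetical stand-ins for three different detection tools
detectors = {
    "detector_a": lambda text: 0.82,
    "detector_b": lambda text: 0.40,
    "detector_c": lambda text: 0.71,
}
print(ensemble_verdict("Some submitted paragraph...", detectors))
```

Requiring agreement trades some sensitivity for fewer false positives, and keeping every individual score in the result leaves the final call to a person rather than to any single tool.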

The Road Ahead is a Messy One

So, how do AI detectors work? They work by making an educated guess based on mountains of data. They are a fascinating, necessary, and deeply flawed technology.

For those of us in leadership positions, ignoring them isn't an option. Understanding their capabilities and their limitations is now a core part of digital literacy. The goal isn't to catch people; it's to preserve authenticity in a world where it's becoming a scarce resource. Developing more user-friendly AI products is a great step, but for now, these detectors are the watchdogs we have. Use them wisely.

Frequently Asked Questions

- What is AI detection in simple terms?

- Can AI detectors be wrong?

- Is there a truly free AI detector that works?

- What do AI detectors look for, exactly?