- Claude vs Google Gemini vs ChatGPT: A Comparative Glance at Their Key Features

- Round 1: Reasoning and Complex Problem-Solving

- Round 2: SEO, Creativity, and Content Generation

- Round 3: Testing Deep Research & Summarisation

- Round 4: Coding and Data Analysis

- Round 5: AI Image & Video Generation Capabilities

- Round 6: Who's the Better Conversationalist?

- Round 7: Pricing Plans

- Round 8: Design, Speed, and Usability

- ChatGPT vs Gemini vs Claude: The Final Verdict!

The age of AI is in full swing, yet for many, it's defined by a single, frustrating question: which tool can we actually trust? According to a Tidio survey, 72% of users trust AI to provide reliable and truthful information, but 75% of that same group admit to being misled by it at least once. And a full 86% of respondents say they have personally encountered an AI hallucination at least once.

These statistics highlight the core pain point for users today: the gap between AI's promise and its unreliable reality. You need an AI that can flawlessly debug code, another that can draft nuanced creative copy, and one that can reason through complex data without errors. But switching between them is a gamble.

It's time to replace that gamble with a verdict. This isn't just another overview; it's a head-to-head feature battle. We pit three large language models (Claude, ChatGPT, and Google Gemini) against each other on the use cases that matter most to determine which AI champion you should choose.

Note: This blog will share a comprehensive comparison of the latest models for Claude (Opus 4.1), ChatGPT (GPT-5), and Gemini (2.5 Pro).

Claude vs Google Gemini vs ChatGPT: A Comparative Glance at Their Key Features

LLMs are gaining huge popularity. Ratnakar Pandey, Chief Data Scientist and AI Consultant, told MobileAppDaily:

“Everybody is talking about ChatGPT. Even people who don't have anything to do with AI are also using it. The kids are using it for their homework. This is high time that you know people are actually getting involved in AI.”

This growing popularity isn't just about core functions and pricing. Claude, Gemini, and ChatGPT also offer a range of features that truly shape the user and developer experience. Let's take a quick look at some of those crucial "other" capabilities that round out their offerings.

| Feature Category | ChatGPT (OpenAI) | Gemini (Google) | Claude (Anthropic) |
|---|---|---|---|
| Multimodality (Input/Output) | Strong (Text, Image, Voice Input; Text, DALL-E Images, Voice Output via integration) | Very Strong (Native Text, Image, Voice, Video Input; Text, Image, Voice Output) | Text-focused, strong Image Analysis (Input); Text Output. Limited direct generation. |
| Context Window Size | Large (e.g., 128k-200k tokens for latest models via Plus/API) | Very Large (e.g., 1M+ tokens for many models, pushing to 2M) | Large (e.g., 200k tokens for latest models) |
| Real-time Data Access | Yes (built-in web search) | Yes (Deep integration with Google Search & ecosystem) | Yes (built-in web search; also strong at working from provided documents) |
| Tool Use / Function Calling | Extensive (Custom GPTs, Code Interpreter, "Work with Apps" desktop) | Advanced (Native function calling, strong Google Workspace/Cloud integration) | Robust (via "Computer Use" or Model Context Protocol for external tools) |
| Safety & Ethics Focus | Strong (Alignment research, content moderation, configurable enterprise guardrails) | Very Strong (Prioritizes safety, factual grounding, responsible AI principles) | Extremely Strong (Constitutional AI, strict safety alignment, ethical reasoning focus) |
| Customization / Fine-tuning | Yes (Fine-tuning API, Custom GPTs for user-level customization) | Yes (Extensive fine-tuning via Vertex AI for developers, custom instructions) | Yes (Fine-tuning API for developers, custom instructions, "Projects") |
| Ecosystem Integration | Broad (Microsoft Copilot, GPT Store, API integrations) | Deep (Seamless with Google Workspace, Android, Google Cloud) | Developer-focused API, growing enterprise integration |
| Mobile App Availability | Yes (iOS, Android) | Yes (iOS, Android, deeply integrated into Pixel devices) | Yes (iOS, Android, plus web and desktop apps) |
| Usage Limit for Latest Models | 10 requests per 5 hours; switches to GPT-5 mini after the limit | 5 requests per minute, 25 per day | No free access to Opus 4.1 |

Round 1: Reasoning and Complex Problem-Solving

Let's cut through the hype around the best AI models. The real test of any AI isn't just speed, but whether you can trust its thinking. With 20% or less of gen-AI-produced content checked before use, AI answers need to be right the first time rather than flagged later. We wanted to know which model delivers a genuinely thoughtful solution, so we gave all three the same prompt:

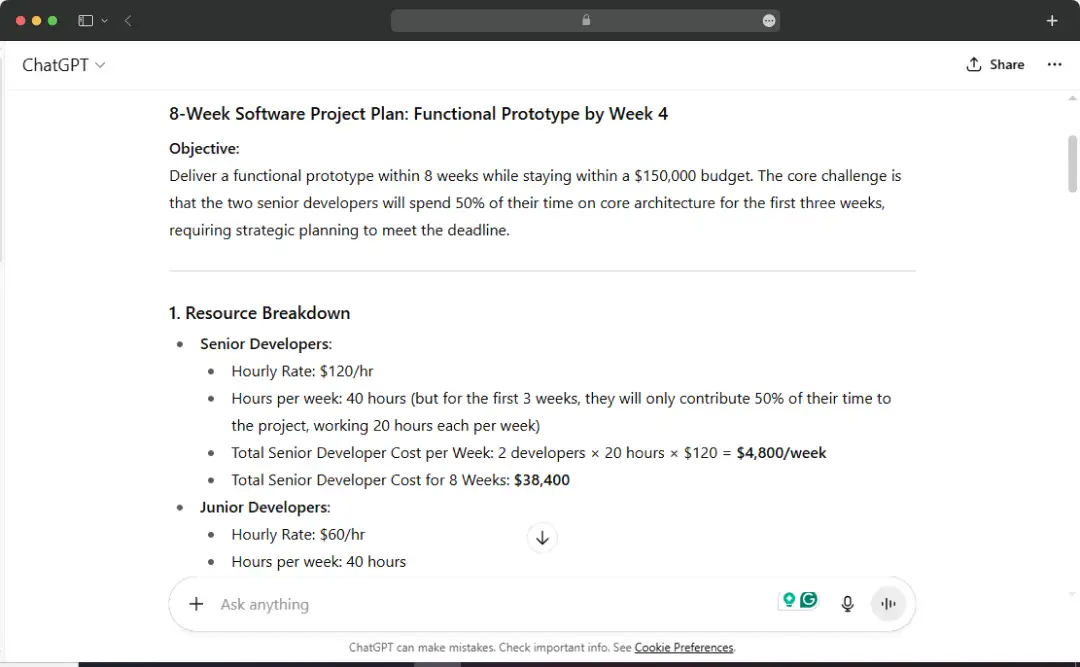

Act as a senior project manager in Austin, Texas. Your task is to plan an 8-week software project with a strict $150,000 budget. You have a team of two senior developers (at $120/hr) and four junior developers (at $60/hr). Here's the core challenge: you must deliver a functional prototype by week 4, even though your two senior devs are required to spend half their time on core architecture for the first three weeks. Create a weekly project plan with a resource and budget breakdown, and justify how it meets the deadline while staying within budget.
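To see why this prompt is a real test, here is a quick back-of-the-envelope check in Python. It assumes a standard 40-hour week for every developer, which the prompt does not specify, so treat the figures as illustrative rather than as part of any model's answer.

```python
# Rates, headcount, duration, and budget come from the prompt.
SENIOR_RATE = 120   # $/hr
JUNIOR_RATE = 60    # $/hr
SENIORS, JUNIORS = 2, 4
WEEKS = 8
BUDGET = 150_000
HOURS_PER_WEEK = 40  # assumption: standard full-time week


def weekly_cost(senior_hours_each=HOURS_PER_WEEK, junior_hours_each=HOURS_PER_WEEK):
    """Team cost for one week at the given per-person hours."""
    return (SENIORS * senior_hours_each * SENIOR_RATE
            + JUNIORS * junior_hours_each * JUNIOR_RATE)


# Keeping everyone full time for all eight weeks.
full_time_total = weekly_cost() * WEEKS
overrun = full_time_total - BUDGET

# Senior hours free for prototype work before the week-4 deadline:
# weeks 1-3 at half time (the other half goes to architecture), week 4 full time.
senior_prototype_hours = SENIORS * (3 * HOURS_PER_WEEK // 2 + HOURS_PER_WEEK)
junior_prototype_hours = JUNIORS * 4 * HOURS_PER_WEEK

print(weekly_cost())                                   # 19200
print(full_time_total, overrun)                        # 153600 3600
print(senior_prototype_hours, junior_prototype_hours)  # 200 640
```

Under that assumption, a fully staffed team costs $19,200 a week, or $153,600 over eight weeks, which is $3,600 over budget. A sound plan therefore has to notice that gap and trim hours somewhere while still leaving enough senior time for the week-4 prototype.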

1. ChatGPT: The Confident Sprinter

ChatGPT was blazing fast, delivering beautifully structured answers in seconds. But its speed came at a cost. On the logic puzzle, it missed a key constraint, leading to a flawed result. It was a classic case of confidence over competence, offering a slick answer without showing its work.

2. Gemini: The Overthinking Powerhouse

Google's Gemini felt like a powerhouse, getting the logic puzzle right but burying the explanation in a dense wall of text. Its analysis was incredibly comprehensive and structured, but often overwhelming; at times it felt like more content than we could comfortably digest.

3. Claude: The Deliberate Thinker

Then came Claude. It was the only AI to verbalize its chain of thought, breaking down the problem step-by-step and actively acknowledging the trade-offs. It demonstrated a more deliberate and transparent thought process.

Round 1 Verdict: Claude

While the others gave answers, Claude demonstrated true reasoning. It’s not just about the correct output; it’s about trusting the process. Claude’s transparent approach feels like a dependable colleague, making it the clear winner for tasks that require deep, trustworthy logic.

Round 2: SEO, Creativity, and Content Generation

Generative AI isn't just about generating text; it's about sparking genuine creativity and producing content that connects on a human level and ranks in AI Overviews and search engines. It's about the art, the nuance, the sheer humanity in its content. We were hunting for the AI that could truly break free from its digital chains and surprise us.

We gave all three the same prompt: Create a 500-word blog post on the topic 'The Future of AI in Content Generation,' focusing on how generative AI is revolutionizing SEO, creativity, and human-level content generation. The post should weave together a balance of technical insight and creative storytelling, ensuring the content is SEO-optimized with relevant keywords.

The tone should be conversational yet insightful, with an emphasis on how AI transcends traditional text generation to produce content that resonates with readers while also ranking well on search engines.

1. ChatGPT: The Confident Auto-Pilot

As soon as we entered the prompt, ChatGPT produced three narratives, all within parameters and perfectly structured. On the surface? Impressive. But dig a little, and you sense an underlying predictability. The content was mostly generic; it felt more like a well-worn encyclopedia entry. It delivered, absolutely. Yet it was the delivery of a highly efficient machine: confident, but often missing creative imagination.

2. Gemini: The Dynamic Alchemist

Gemini’s response struck a fine balance between creativity and technical accuracy. The content was expertly SEO-optimized, including keywords naturally without overstuffing, and conveyed a good understanding of generative AI’s role in content creation. The human-like quality of the writing was strong, with well-placed anecdotes and engaging metaphors. Overall, Gemini's response showed great potential for both technical SEO alignment and creative expression.

3. Claude: The Thoughtful Wordsmith

Claude delivered a thoughtful and well-rounded blog post that showed a solid grasp of the topic. The content was clear, concise, and SEO-friendly, with well-placed keywords and relevant subheadings. The narrative flowed naturally, with moments of creative expression.

However, the writing leaned slightly more creative and less informative. While the blog was engaging and technically sound, the depth of human-like nuance was slightly lacking. It didn’t push the boundaries of what we’d expect from an AI capable of generating unique, artistic content.

Round 2 Verdict: Gemini

While Claude's thoughtful writing was convincing, and ChatGPT offered undeniable efficiency, Gemini takes this round for SEO, Creativity, and Content Generation. Why? Because it exhibited the most versatile and vivid imaginative range. Its post was well-researched, SEO-optimized, neatly structured with H2s and H3s, and had just the right amount of creativity.

Round 3: Testing Deep Research & Summarisation

In today's data deluge, the best AI models don't just pull facts; they understand, synthesize, and distill complex information into something genuinely usable. This round was about deep investigative prowess and the sharp art of concise, insightful summarization. We tested each model with the prompt:

Research the recent global impacts of quantum computing advancements, identifying key economic shifts, ethical debates, and potential societal benefits. Summarize your findings into a concise, actionable briefing for a non-technical business executive.

1. ChatGPT: The Broad-Stroked Synthesizer

ChatGPT was quick, as expected. It offered a comprehensive overview of quantum computing's impacts, hitting all the requested areas. While easy to follow, the depth felt a bit thin: nuances in economic shifts or ethical debates often got lost in its broad summarization. Most importantly, it cited credible sources such as Boston Consulting Group, the Financial Times, and The Wall Street Journal. Most of its data was accurate, and we spotted no hallucinations.

2. Gemini: The Analytical Deep Diver

Google's Gemini truly impressed. It performed a genuine deep dive, adeptly cross-referencing information and identifying subtle connections. Its summarization then presented these complex interdependencies with striking clarity and conciseness. The briefing was actionable, highlighting critical takeaways without overwhelming the reader. It felt like a sharp analyst who grasped the real implications. However, it did not cite its sources, so its claims could not be independently verified.

3. Claude: The Contextual Curator

Claude excelled at understanding the specific subtleties of the prompt, especially the ‘non-technical executive’ audience. Its research was well-organized and transparently cited. It took data from Quantum Science and Tech Research. For summarization, Claude skillfully preserved essential context and complexity while making information digestible. It simplified without oversimplifying, offering carefully curated summaries. A measured, meticulous approach that built trust.

Round 3 Verdict: ChatGPT

ChatGPT and Claude were nearly neck and neck, but ChatGPT takes the win for Deep Research and Summarization. Claude's curation was strong, yet ChatGPT offered more breadth: it covered every requested area, drew on credible sources, and reframed the findings into concise, actionable insights for a non-technical executive without hallucinating. It didn't just find information; it processed, understood, and reframed it to add real value.

Round 4: Coding and Data Analysis

When it comes to choosing the best AI model for coding and crunching numbers, it's about writing clean, functional code, debugging issues, and extracting genuine insights. This round pushed the models beyond simple generation. We gave them this prompt:

Given a CSV file of customer transaction data (columns: transaction_id, customer_id, amount, product_category, timestamp), write Python code to identify the top 5 product categories by total sales and visualize monthly sales trends using Matplotlib. Also, explain any assumptions made about the data structure.
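For readers who want a reference point, here is a minimal sketch of one way to answer this prompt. It is not any model's actual output. It assumes `amount` is numeric and `timestamp` is in ISO 8601 format, and it skips rows that break those assumptions rather than failing. The helper names (`load_transactions`, `top_categories`, `monthly_sales`, `plot_monthly`) and the sample rows are hypothetical. Aggregation uses only the standard library, and Matplotlib is imported inside the plotting function so the data logic can be checked without a display.

```python
import csv
import io
from collections import defaultdict
from datetime import datetime


def load_transactions(fileobj):
    """Parse the CSV into (category, amount, timestamp) tuples.

    Assumptions (not stated in the prompt): `amount` is numeric and
    `timestamp` is ISO 8601, e.g. "2024-01-05 10:00:00".
    """
    rows = []
    for row in csv.DictReader(fileobj):
        try:
            category = row["product_category"]
            amount = float(row["amount"])
            ts = datetime.fromisoformat(row["timestamp"])
        except (KeyError, TypeError, ValueError):
            continue  # skip rows that break the assumptions above
        rows.append((category, amount, ts))
    return rows


def top_categories(rows, n=5):
    """Return the n categories with the highest total sales, largest first."""
    totals = defaultdict(float)
    for category, amount, _ in rows:
        totals[category] += amount
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


def monthly_sales(rows):
    """Total sales per calendar month, keyed "YYYY-MM" in chronological order."""
    totals = defaultdict(float)
    for _, amount, ts in rows:
        totals[ts.strftime("%Y-%m")] += amount
    return dict(sorted(totals.items()))


def plot_monthly(monthly, path="monthly_sales.png"):
    """Save a line chart of monthly sales; Matplotlib is imported lazily."""
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    fig, ax = plt.subplots()
    ax.plot(list(monthly), list(monthly.values()), marker="o")
    ax.set_xlabel("Month")
    ax.set_ylabel("Total sales")
    ax.set_title("Monthly sales trend")
    fig.autofmt_xdate()  # rotate month labels so they don't overlap
    fig.savefig(path)
    plt.close(fig)


# Hypothetical sample data; the last row has a non-numeric amount and is skipped.
sample = io.StringIO(
    "transaction_id,customer_id,amount,product_category,timestamp\n"
    "1,c1,120.0,Books,2024-01-05 10:00:00\n"
    "2,c2,80.5,Games,2024-01-20 12:30:00\n"
    "3,c1,200.0,Books,2024-02-02 09:15:00\n"
    "4,c3,n/a,Toys,2024-02-10 14:00:00\n"
)
rows = load_transactions(sample)
print(top_categories(rows))  # [('Books', 320.0), ('Games', 80.5)]
print(monthly_sales(rows))   # {'2024-01': 200.5, '2024-02': 200.0}
# plot_monthly(monthly_sales(rows))  # requires Matplotlib
```

Stating assumptions explicitly (and skipping bad rows instead of crashing) is the kind of "real-world data challenge" the prompt's last sentence is probing for.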

1. ChatGPT: The Boilerplate Specialist

ChatGPT was fast, providing functional Python code. However, its output often lacked elegance or best practices. Explanations about data assumptions or the why behind its choices felt generic, almost an afterthought. It offered a solid start, but rarely the refined solution or deep insight needed for production-ready work.

2. Gemini: The Data-Savvy Architect

Gemini approached the problem holistically. Its Python code was well-structured and efficient. It immediately made intelligent assumptions about data cleanliness and explained why they mattered.

When visualizing, it often offered insights into what trends implied, going beyond mere description. It demonstrated an integrated understanding from raw data to actionable visualization, acting as a true data-savvy architect.

3. Claude: The Debugging Collaborator

Claude AI showed a thoughtful approach. Its strength lies in reasoning through the problem, often providing clear, step-by-step logic explanations. If errors existed in the given simulated data, Claude was frequently the first to gently point them out and offer corrections. For complex coding, Claude felt like a truly valuable, collaborative engineer.

Round 4 Verdict: Gemini

Gemini takes the lead for Coding and Data Analysis. While ChatGPT offers speed and Claude excels at debugging and clear reasoning, Gemini consistently delivers the most well-rounded package. It combined accurate code generation with a sharp understanding of data interpretation, offering insightful explanations and anticipating real-world data challenges. You can use Gemini to code your next project.

Round 5: AI Image & Video Generation Capabilities

The magic of AI conjuring visuals from words is clear. But the real test isn't just generating an image; it's about artistic consistency, nuanced interpretation, and evoking genuine feeling. This round challenged their ability to create art, maintain style, and push visual storytelling.

We wanted to see who could truly paint with code. It’s astounding to think that over 34 million AI-generated images are now created daily. The prompt we used challenged their creative vision:

Generate three distinct images: 1) A cyberpunk alleyway at night, with neon reflections and a lone figure. 2) A fantastical, overgrown ancient ruin consumed by bioluminescent jungle. 3) An abstract representation of 'digital consciousness' using light and geometric forms. Emphasize unique visual style and atmospheric detail in each.

1. ChatGPT: The Prolific and Adaptable Creator

ChatGPT, with its integrated visual models, was incredibly prolific. It churned out images for each concept swiftly, consistently adhering to themes. Its strength lies in reliably generating usable and recognizable visuals across a broad range of styles without fuss.

For high-volume or diverse creative needs, its consistent, rapid output made it exceptionally practical. It's the dependable workhorse for broad visual tasks.

2. Gemini: The Visionary Artist

Google’s Gemini proved visionary. Its interpretations were remarkably imaginative and visually striking, digging deep into nuances. The cyberpunk alley pulsated with atmosphere; the jungle felt alive. Gemini delivered images with rich atmosphere and a unique artistic style. It felt like collaborating with a true artist who saw the vision.

3. Claude: The Conceptualizer

Claude, notably, doesn't directly generate images like the others. Its strength is a profound understanding and detailed description of visual concepts. It could refine your prompt, suggesting impactful visual elements. While it couldn't paint the picture, it was an invaluable conceptualizer, a master at articulating the vision for a visual piece.

Round 5 Verdict: ChatGPT

For AI Image & Video Generation Capabilities, ChatGPT models take the win for their sheer breadth, speed, and consistent ability to deliver usable visual assets across wildly different themes. While Gemini often produced images with profound artistic depth, and Claude excelled at conceptualizing, ChatGPT's unparalleled reliability and efficiency in generating diverse visual output make it indispensable for creators needing versatile and rapid visual content. You can use ChatGPT to make your next image and video shine.


Round 6: Who's the Better Conversationalist?

Beyond tasks, an AI's ultimate test is pure conversation. Can it truly chat? It’s about natural, fluid dialogue, understanding subtle cues, holding context, and navigating ambiguity with grace. A great conversationalist makes you forget you're talking to a machine. This round explored open-ended discussions, where personality and empathetic understanding truly mattered.

1. ChatGPT: The Knowledgeable Lecturer

ChatGPT engaged with its characteristic clarity, structuring the discussion well. Responses were informative, covering broad philosophical points. However, follow-up questions often felt predictable, designed to solicit more information rather than deeply probe nuances. It operated like a competent academic, keeping the discussion on track but sometimes lacking spontaneous, human-like detours.

2. Gemini: The Expansive Explorer

Google's Gemini proved a remarkably expansive conversational partner. It often connected disparate ideas with impressive fluidity, enriching the discussion with broad knowledge. Its contextual understanding was strong. Gemini frequently offered thought-provoking analogies, pushing the conversation into novel territory. Its answers ran long, but that detail usually made for a richer exploration of the philosophical landscape.

3. Claude: The Reflective Prober

Claude approached this profound discussion with notable thoughtfulness and a deeply reflective style. Its responses demonstrated keen awareness of the topic's sensitivities. Claude excelled at asking precisely targeted follow-up questions that genuinely probed deeper into nuances, often rephrasing for clarity. The dialogue felt unhurried, patient, and remarkably considerate, fostering introspection.

Round 6 Verdict: Claude

For Who's the Better Conversationalist, Claude narrowly takes the win. While ChatGPT offers structure and Gemini provides expansive exploration, Claude's superior ability to engage in a truly reflective, empathetic, and patient dialogue, coupled with its precisely probing follow-up questions, made the interaction feel the most genuinely human. It fostered a sense of shared inquiry that was truly exceptional.

Round 7: Pricing Plans

This round cuts straight to the economics, evaluating accessibility, scalability, and overall value. Understanding pricing structures is crucial, as even the smartest AI needs to be sustainable for your budget.

| Tier | ChatGPT (GPT-5) | Gemini (2.5 Pro) | Claude (Opus 4.1) |
|---|---|---|---|
| Free | 10 requests per 5 hours + 1 thinking message per day | 5 requests per minute, 25 per day (limited access) | No free access to Opus 4.1 |
| Paid Plans | From $20/month | From $19.99/month | From $20/month, or $17/month billed annually |
| API Pricing (per 1M tokens) | From $1.25 input, $10.00 output | From $1.25 input, $10.00 output | $15.00 input, $75.00 output |
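Because all three APIs bill per million tokens, with separate input and output rates, the cost of a workload is just (input tokens / 1M × input price) + (output tokens / 1M × output price). The sketch below applies that formula to the list prices in the pricing table above for a hypothetical workload of 2M input and 500k output tokens. Treat it as a rough estimate: real bills can differ because of tiered long-context pricing, prompt caching, or batch discounts, none of which are modeled here.

```python
# Per-1M-token list prices in USD (input, output), as given in the pricing table.
PRICES = {
    "GPT-5": (1.25, 10.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Claude Opus 4.1": (15.00, 75.00),
}


def api_cost(model, input_tokens, output_tokens):
    """Estimated USD cost: tokens / 1M * price, summed over input and output."""
    input_price, output_price = PRICES[model]
    return (input_tokens / 1_000_000 * input_price
            + output_tokens / 1_000_000 * output_price)


# Hypothetical workload: 2M input tokens, 500k output tokens.
for model in PRICES:
    print(f"{model}: ${api_cost(model, 2_000_000, 500_000):.2f}")
# GPT-5: $7.50
# Gemini 2.5 Pro: $7.50
# Claude Opus 4.1: $67.50
```

At these list prices, Claude Opus 4.1 costs 12 times as much per input token and 7.5 times as much per output token as GPT-5 or Gemini 2.5 Pro, so output-heavy workloads widen the gap.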

General Observations:

These AI giants generally offer similar tiered approaches: a free entry point, then individual and team options. The real value lies in what you get at each level. OpenAI's ChatGPT, powered by GPT-5, emphasizes its broad ecosystem and user-friendly interface.

Google's Gemini 2.5 Pro leans into deep integration with its extensive product suite and powerful multimodal capabilities. Anthropic's Claude Opus 4.1 focuses on extensive context windows and nuanced ethical handling, at a noticeably higher API price. Each targets a distinct need, so the right choice depends on your budget and requirements rather than on any single option being universally affordable.

Round 8: Design, Speed, and Usability

This round isn't just about what an AI can do, but how it does it. We’re talking about the complete user experience: its interface design, response speed, and overall ease of use. Can you get what you need quickly, without wrestling with an overly complex system? Is it intuitive, reliable, and a genuinely helpful partner in your workflow? This crucible tests more than just intelligence; it tests convenience, efficiency, and thoughtful design.

1. ChatGPT: The Clean Workhorse

ChatGPT models consistently offer a clean, straightforward approach to user interaction. Its responses are typically logical, following common patterns of expectation. When it comes to speed, it's a remarkably quick responder, generating ideas or executing tasks with minimal delay. However, its overall "design philosophy" sometimes feels a bit generic, like a reliable template. It is perfectly usable and highly efficient for everyday tasks.

2. Gemini: The Feature-Rich Innovator

Google’s Gemini frequently presents more elaborate and feature-rich interactions and capabilities. It often incorporates advanced AI functionalities seamlessly into its proposed workflows, suggesting dynamic adjustments or intelligent system behaviors. Its interface with the user, whether through text or integrated tools, leans towards modern, interactive components, showcasing potential for innovative user experiences. Response speed is generally excellent, especially when tackling complex, multi-faceted requests.

3. Claude: The Thoughtful Clarifier

Claude approaches user interaction with notable thoughtfulness, prioritizing clarity and comprehensive understanding. Its responses often emphasize transparent explanations and user control, crucial for building trust. While not always the absolute fastest, its output feels meticulously considered, often verbalizing its thought process. It builds a robust user journey, valuing user confidence above all.

Round 8 Verdict: Gemini

For Design, Speed, and Usability, Gemini takes the win. While ChatGPT offers reliable simplicity and Claude excels at thoughtful considerations, Gemini consistently balances all three elements most effectively. Its ability to generate feature-rich, innovative solutions and integrate complex AI capabilities smoothly, all delivered with excellent speed, gave it the edge for a seamless user experience.

Key Performance Comparison

Here's a quick comparison of ChatGPT, Gemini, and Claude across key parameters. Note that the factual consistency rate is simply 100% minus the hallucination rate.

| Parameter | ChatGPT | Gemini | Claude |
|---|---|---|---|
| Hallucination Rate | 1.4% | 1.1% | 4.8% |
| Factual Consistency Rate | 98.6% | 98.9% | 95.2% |
| Answer Rate | 99.3% | 95.9% | 100.0% |
| Average Summary Length (Words) | 96.4 | 72.1 | 110 |
| Real-World Coding | 74.9% on SWE-bench Verified, 88% on Aider Polyglot | 74.4% on HumanEval and 74.9% Natural2Code | 72.5% on SWE-bench Verified |
| Multimodal Understanding (MMU) | 84.2% on MMMU | 83.6% on Big Bench Hard | 76.5% on MMMU (validation) |

Source: Hugging Face, OpenAI, Anthropic, and Google

ChatGPT vs Gemini vs Claude: The Final Verdict!

So, after this deep dive into Google Gemini vs Claude AI vs ChatGPT, it's clear: no single AI reigns supreme across every task. Each model (Claude Opus 4.1, GPT-5, and Gemini 2.5 Pro) brings unique strengths, whether it's Claude's thoughtful reasoning, ChatGPT's broad adaptability, or Gemini's integrated power.

The future is collaboration; it’s about understanding their individual superpowers and strategically leveraging them. In the ongoing ChatGPT vs Google Gemini vs Claude AI debate, the "best" AI is the one you smartly integrate into your workflow, combining its diverse capabilities to elevate your projects to new heights.

Frequently Asked Questions

- Is Claude better than ChatGPT for specific writing tasks, or vice versa?

- Which AI is better than ChatGPT if I need deep research and real-time information access?

- Is ChatGPT the best AI overall, or do others excel in specific areas?

- Which AI is generally considered the best AI for programming and coding?

- Beyond general tasks, which AI is best for programming, particularly when dealing with intricate problems?
