OpenAI Launches o3 and o4-mini, Pushing AI Reasoning Into a New Era

Date: April 17, 2025

Models built for visual reasoning and STEM tasks are now available to developers and ChatGPT users.

OpenAI is raising the bar once again. The company behind ChatGPT has released two new AI models — o3 and o4-mini — designed to take on one of AI’s biggest frontiers: reasoning.

The announcement, made Wednesday, signals OpenAI’s focus on building models that can think more like humans — not just through text, but with visuals too. These models aren’t just about chatting anymore; they’re built to analyze diagrams, interpret whiteboard sketches, and tackle complex STEM problems with improved accuracy.

Strong Models With Strong Potential

Both models are designed to outperform their predecessors, especially in areas like coding. OpenAI wrote in its official blog post:

“OpenAI o3 is our most powerful reasoning model that pushes the frontier across coding, math, science, visual perception, and more. It sets a new SOTA on benchmarks including Codeforces, SWE-bench (without building a custom model-specific scaffold), and MMMU.”

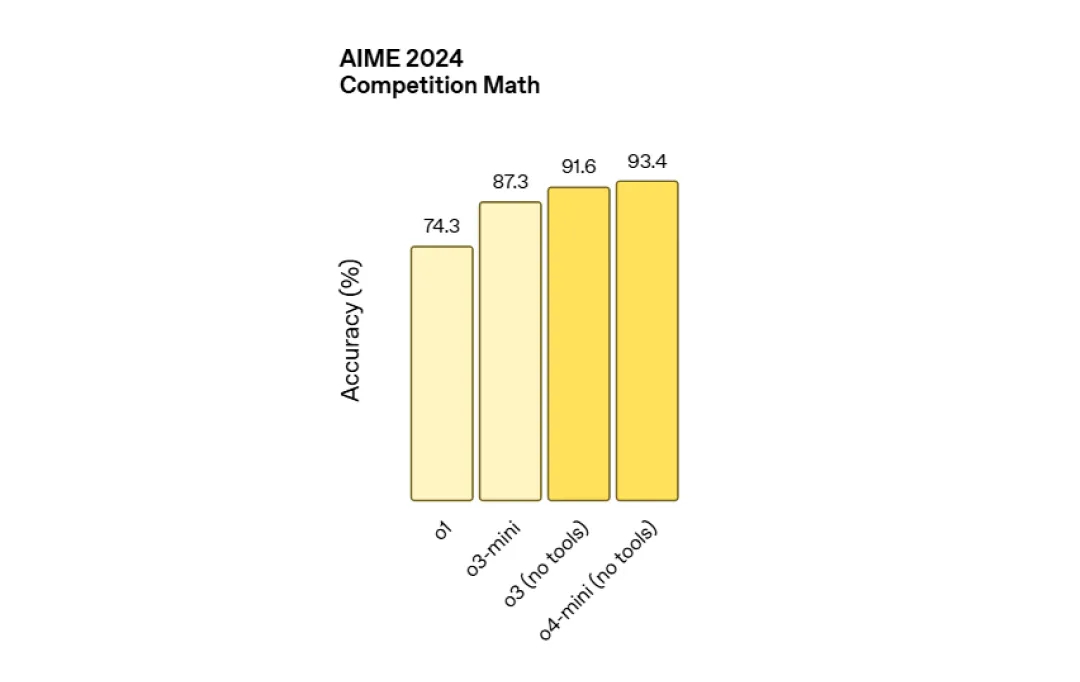

And the numbers back that up. In internal evaluations, o3 scored 91.6% on the American Invitational Mathematics Examination (AIME) and cleared the human-level benchmark on the ARC-AGI test, a notable milestone in machine reasoning.

Its lighter sibling, o4-mini, is no slouch either. Optimized for efficiency, o4-mini performs well on tasks like coding, math, and logic — but with a smaller computational footprint. It’s now powering ChatGPT for free-tier users, while o3 is available for those on Plus, Team, and Enterprise plans. Developers can also access both models via OpenAI’s API.

What sets these models apart? It’s not just the raw intelligence — it’s how they reason. Both o3 and o4-mini can now interpret and manipulate images as part of the reasoning process. That means they can zoom into visuals, rotate diagrams, and analyze figures as if they were part of the conversation. It’s a step toward a more multimodal AI experience — one that understands not just what we say, but what we show.

This launch also marks a strategic shift. Older models like gpt-3.5-turbo-instruct are being phased out, as OpenAI consolidates its offerings around more capable and efficient architectures.

Behind the scenes, the models underwent rigorous safety evaluations under OpenAI’s updated Preparedness Framework, which is meant to ensure advanced AI tools are deployed responsibly. It’s a reminder that while the models are getting smarter, the stakes are also getting higher.

With the release of o3 and o4-mini, OpenAI isn’t just improving performance — it’s redefining what AI can do. These models point toward a future where machine intelligence isn’t just fast or fluent, but capable of truly understanding complex problems across text and imagery.

By Arpit Dubey

