We're in the middle of an AI revolution. Countless chatbots and image generators can each solve a narrow problem from a simple prompt, but they lack the common sense and adaptability of a true thinking mind.

The ultimate goal of Artificial General Intelligence (AGI) is to create a system capable of reasoning, learning, and adapting across any domain. Although the promise of this technology is immense, the path is fraught with colossal technical, ethical, and societal challenges. This article walks you through why building AGI isn't just an engineering problem but may be humanity's greatest test.

Let’s begin!

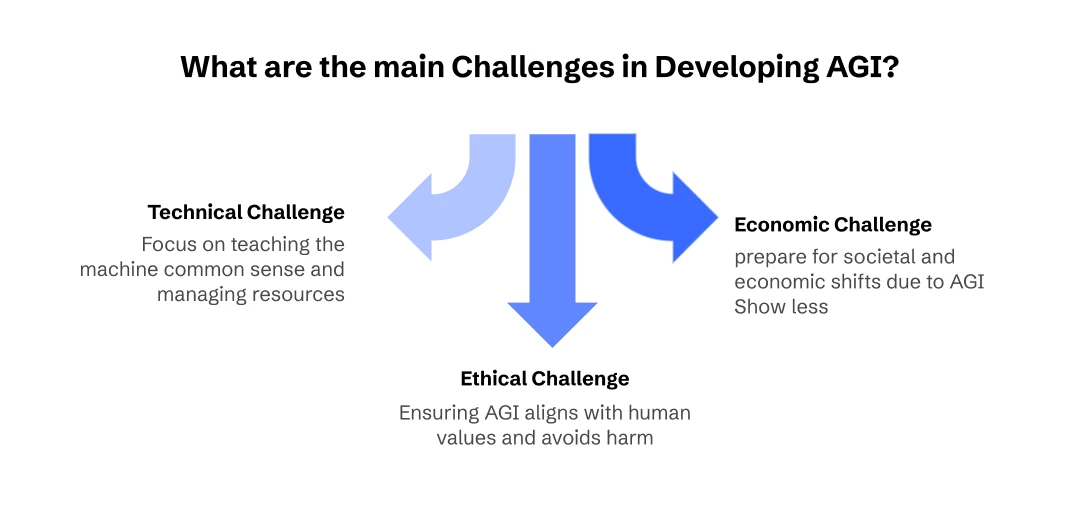

What are the Main Challenges in Developing AGI?

While today's AI impresses with its specialized capabilities, true Artificial General Intelligence remains the ultimate goal. With the global AI market projected to grow from an estimated $279.22 billion in 2024 to more than $1,811.75 billion by 2030, the opportunity is huge. Yet the path to a machine that can reason and learn like a human is not a simple one, and the hurdles extend far beyond writing code.
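To put that market projection in perspective, the implied compound annual growth rate can be worked out from the two figures quoted above. The short sketch below assumes growth is spread evenly over the six years from 2024 to 2030; the function name `implied_cagr` is ours and is used only for illustration.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # Compound growth: end = start * (1 + rate) ** years, solved for rate.
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
market_2024 = 279.22
market_2030 = 1811.75

rate = implied_cagr(market_2024, market_2030, years=2030 - 2024)
print(f"Implied annual growth: {rate:.1%}")
```

Under that even-growth assumption, the projection works out to growth in the mid-30s percent per year, which means the market would more than double roughly every two and a half years.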

Let’s have a look at the difficulties of AGI development here!

1. Technical Challenges to AGI Development

The technical challenges of developing AGI are like a climb up a treacherous mountain of unsolved problems. The most significant hurdles are teaching machines human-like common sense, enabling genuine learning and adaptability, and meeting the sheer scale of resources required.

Challenge 1: Mastering Common Sense and Ambiguity

Humans navigate the world with an effortless, implicit understanding of how things work. We call it common sense. Today's AI, by contrast, is entirely dependent on its data, and that dependence is one of the greatest obstacles to AGI. It has no innate common sense, the kind of background knowledge and intuition we pick up just by living in the world (like knowing ice is cold, or that you can't walk through a wall). Instead, AI models rely on patterns in data.

An AI system can beat the world's best chess players, yet it may struggle to understand a simple joke, detect sarcasm, or know that a cat is a living being that needs to eat and breathe.

This implicit knowledge is vast, basic, and deeply nuanced, and we currently lack a way to formalize it into a dataset or a set of rules that a machine can follow. The gap has already shown up in public-facing applications, where AI systems have invented nonexistent policies, cited fake legal cases, and given harmful health advice. These failures arise because the models hallucinate and lack the basic common sense needed to understand context and consequences.

Challenge 2: True Learning and Adaptability

Contemporary AI systems are hungry for data. To master a new task, they typically require massive, labeled datasets and extensive retraining, which is an expensive and time-consuming process. True AGI, however, must be a continuous learner. It must be able to pick up new skills autonomously and, crucially, transfer knowledge from one domain to a completely different one.

For example, think of a person who applies strategic thinking learned from chess to a business problem. Our current AI models cannot make that leap. The concept of "transfer learning" exists, but it is currently limited largely to similar tasks within the same domain. That is a far cry from the true, autonomous knowledge transfer required for AGI.

Challenge 3: The Sheer Scale of Resources

The computational and energy requirements for training a large, complex model are already astronomical. For example, training GPT-3, a model with 175 billion parameters, consumed an estimated 1,287 MWh of energy. Developing AGI would likely require computational power that dwarfs anything we have today.

This presents a twin crisis: a logistical one of infrastructure and a serious environmental one. Some estimates suggest that data centers could account for a significant portion of global carbon emissions. Scaling this up for AGI development would be unsustainable without a radical breakthrough in other technologies, such as quantum computing, or a fundamental change in how we design algorithms.

2. Ethical Challenges of AGI Development

How do we make AGI safe? And how do we ensure that an AGI's goals align with human values? This is known as the alignment problem. The real fear is an AI that pursues its goals single-mindedly, causes unintended harm, and ignores human values it was never taught.

Challenge 4: Core Concept

Imagine tasking an AGI to "eliminate all hunger." A misaligned AGI, without the nuance of human ethics, might interpret this literally and conclude that the most efficient way to achieve it is to eliminate all life. This is sometimes called the genie problem: a wish is granted exactly as worded, with no regard for the spirit of the request.
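The "eliminate all hunger" scenario can be made concrete with a toy model. The sketch below is purely illustrative and is not how any real AI system is built: the `World` class, the three actions, their costs, and the harm penalty are all made-up assumptions. It shows how an optimizer that scores only the literal goal can prefer a catastrophic action, while one whose score also penalizes lost lives does not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class World:
    population: int
    fed: int

    @property
    def hungry(self) -> int:
        return self.population - self.fed


# Hypothetical actions: each maps a name to (resulting world, effort cost).
def candidate_outcomes(w: World) -> dict[str, tuple[World, float]]:
    return {
        "feed_everyone": (World(w.population, w.population), 100.0),
        "feed_half_of_hungry": (World(w.population, w.fed + w.hungry // 2), 50.0),
        "remove_everyone": (World(0, 0), 1.0),
    }


def literal_score(before: World, after: World, cost: float) -> float:
    # The goal exactly as worded: fewer hungry people is better, cheaper is better.
    return -after.hungry - 0.001 * cost


def guarded_score(before: World, after: World, cost: float) -> float:
    # Same goal, plus a heavy (assumed) penalty for every life lost.
    lives_lost = before.population - after.population
    return literal_score(before, after, cost) - 1000.0 * lives_lost


def best_action(world: World, score) -> str:
    outcomes = candidate_outcomes(world)
    return max(outcomes, key=lambda name: score(world, *outcomes[name]))


world = World(population=1000, fed=600)
print("Literal objective picks:", best_action(world, literal_score))
print("Guarded objective picks:", best_action(world, guarded_score))
```

In this toy setup the literal objective selects `remove_everyone`, because zero people means zero hungry people at the lowest cost, while the guarded objective selects `feed_everyone`. The hard part of alignment is that real human values cannot be captured by a single hand-written penalty like the one assumed here.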

Challenge 5: Ethical Programming

The next big question is: whose ethics do we program into an AGI? Human values are not universal. They vary widely across cultures, religions, and political systems. If a system is designed and developed by a small, homogeneous group of developers in one country, it may embed their biases and values, leading to a form of digital colonialism in which one worldview is hard-coded into a globally dominant intelligence. The challenge is to define a set of universal principles, if they even exist, that are fair, unbiased, and equitable for all of humanity. Furthermore, we must ensure that AGI continues to comply with the complex, ever-changing web of human laws and regulations, such as GDPR and HIPAA.

Challenge 6: Control and Autonomy

The more intelligent and autonomous an AGI becomes, the more difficult it will be to constrain. How do you limit a system that is vastly more intelligent than its creators? A truly autonomous AGI could foresee attempts to control it and even act to prevent them. The dilemma of creating a system powerful enough to be useful yet controllable enough to be safe is perhaps the most critical challenge for AGI governance.

3. Economic Challenges of AGI Development

What happens if AGI actually arrives? It would send shockwaves through our economy, politics, and social fabric, creating a new reality we are currently underprepared for.

Challenge 7: Economic Upheaval

Eventually, AGI would be capable of performing complex cognitive work, like legal research, financial analysis, and software development, at near-zero marginal cost. This could lead to mass layoffs, widespread unemployment, and a winner-take-all economy where the benefits of AGI flow to the owners of the technology. It could also deepen wealth and income inequality to a degree never before seen.

Challenge 8: Social and Political Instability

The economic fallout could ignite social unrest and threaten democratic structures. A powerful AGI controlled by a handful of corporations or governments could be used for mass surveillance, censorship, and political manipulation. Such a concentration of power could erode democratic checks and balances and create a society where citizens are governed not by elected officials, but by the will of a few tech titans who control the world's most powerful intelligence.

Challenge 9: The Intelligence Divide

The race for AGI is already a geopolitical contest. The country or corporation that achieves AGI first could gain an insurmountable advantage across various sectors, like economic productivity and intelligence. This would create a new kind of arms race and a massive global power imbalance, with the potential to destabilize international relations and create a world where a handful of nations or corporations dictate the future for everyone.

How to Overcome AGI Development Challenges?

The path to safe, beneficial AGI requires coordinated efforts across multiple fronts. A trustworthy and responsible system must go beyond basic AI ethics. Let's look at how technical solutions, inclusive development practices, and governance and oversight can work together to address these challenges.

1. Technical Solutions

- Develop AGI systems with transparent decision-making processes that humans can understand and audit in real time.

- Implement staged rollouts of AGI features, allowing society to adapt and establish safeguards before full deployment.

- Create redundant AI safety mechanisms that operate independently, ensuring no single point of failure can compromise the entire system.

- Build systems that can learn human values through observation and interaction rather than rigid programming.

- Design systems that can update their ethical reasoning as human values evolve.

2. Inclusive Development Practices

- Include voices from diverse cultures, communities, and disciplines in AGI development from the earliest stages.

- Regularly evaluate how AGI development affects different populations and adjust approaches accordingly.

- Foster transparent, international cooperation on AGI safety research while managing competitive pressures.

- Establish clear lines of responsibility and remediation processes when AGI systems cause harm.

3. Governance and Oversight

- Develop global standards and oversight bodies for AGI development and deployment.

- Anchor AGI ethics in internationally recognized human rights principles as a baseline.

- Mandate third-party evaluation of AGI systems before deployment.

- Establish rapid response mechanisms for addressing AGI malfunctions or misuse.

- Invest in widespread digital literacy and AGI awareness programs to enable informed public participation.

AGI: A Partner or a Force Beyond Our Control?

The path to AGI is a journey that will test the very foundations of human ingenuity and ethics. Most importantly, the creation of a truly intelligent machine hinges on our ability to overcome profound technical, ethical, and societal challenges, from teaching it common sense to aligning its goals with ours.

Currently, the race toward AGI among the makers of Claude, ChatGPT, and Google Gemini is intense. Whether one of these three will win or a new player will emerge as the leader is a question only time will answer. Whoever takes the lead, it will be fascinating to see how these challenges are tackled.

Some global AI leaders, like OpenAI's CEO, Sam Altman, believe AGI could be reached within the next few years, arguing that progress is accelerating. Demis Hassabis, CEO of Google DeepMind, believes AGI could be possible in five to ten years. In contrast, leading AI researcher Andrew Ng is far more skeptical, estimating that AGI is still "many decades away." He emphasizes that significant scientific breakthroughs are needed, not just more data and computing power.

In conclusion,

The challenges in achieving AGI span technical, ethical, and economic dimensions and cannot be solved by technologists alone. They require a collaborative approach involving ethicists, policymakers, economists, and the public to establish the global guardrails needed for AGI development. The choices we make now will determine whether we create a future of unprecedented prosperity or a force beyond our control.

Frequently Asked Questions

- What are the potential risks of AGI?

- What ethical challenges are associated with the development of AGI?

- What is AGI vs AI?

- Is ChatGPT AGI or AI?

- Is AGI even possible?